Use Case | 2025

Empowering Decisions with AI

INDUSTRY

Medical Devices

DURATION

3 Months

ROLE PLAYED

Design Lead and Strategist

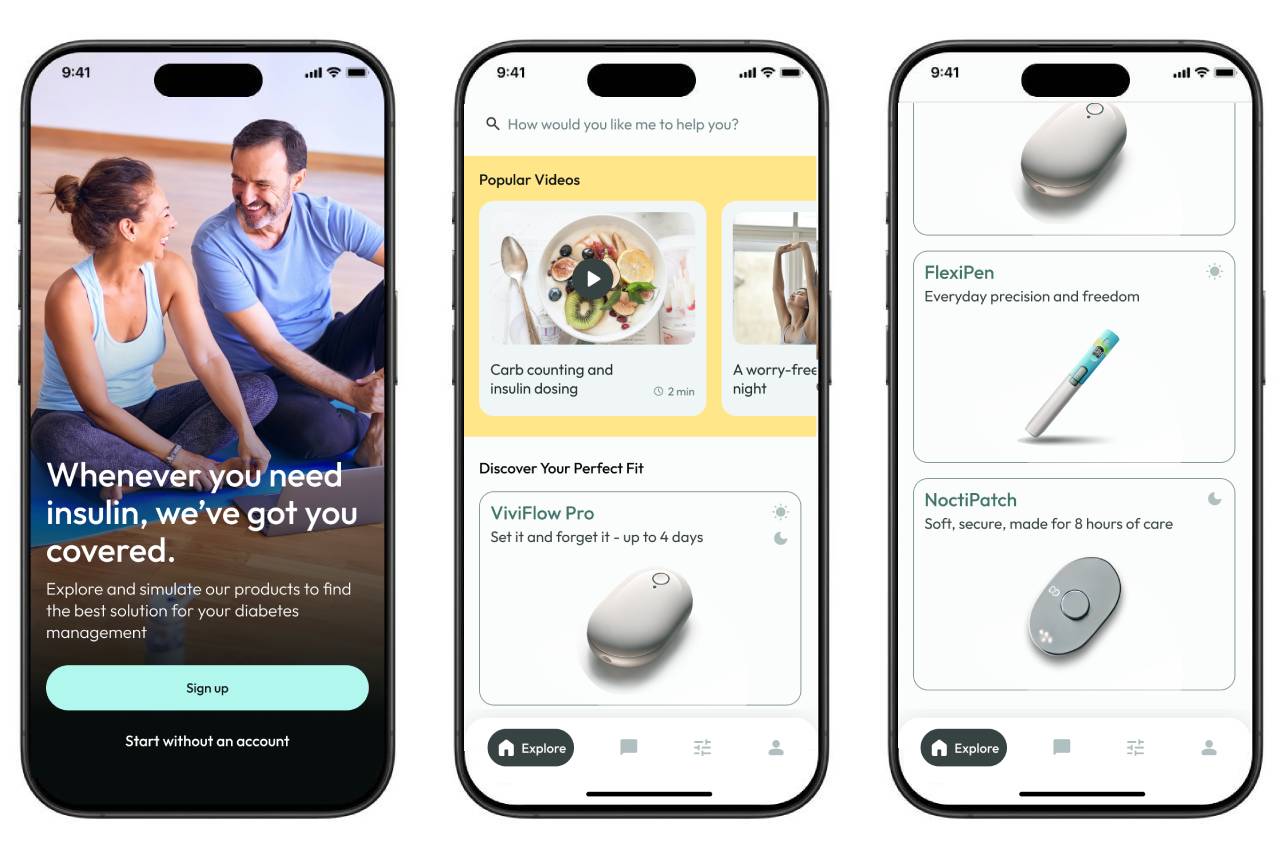

Leveraging LLMs and AI to Simplify Product Adoption: A Collaborative Proof of Concept

Introduction

Navigating diabetes management solutions can be overwhelming, particularly for new users. The landscape includes a wide array of options, such as insulin pumps, pens, and continuous glucose monitors (CGMs), many of which have overlapping features, even within the same brand. Key decisions are often made in settings where limited time constrains the exploration of different solutions, such as a doctor’s office. Reaching a sales representative, the ideal resource, is not always quick or straightforward enough to address very specific questions or concerns.

Recognizing these challenges, I proposed and led a collaborative proof of concept (PoC) to design an interactive app aimed at empowering users with better decision-making tools. As the design lead, I shaped the UX design processes and frameworks, driving alignment and working closely with the data science and content strategy teams. With input from an accessibility expert, we envisioned an app that would enable users to explore, compare, and simulate medical devices in a personalized, accessible, and user-friendly environment. This PoC prioritized understanding feasibility and potential value without committing to full implementation.

Note: This PoC was developed as part of an advanced training program in AI and healthcare at MIT, showcasing the potential of AI-driven solutions with real data and actual products. Details regarding the specific brand(s) involved have been omitted to adhere to confidentiality standards.

Outcome

The Proof of Concept demonstrated the potential for an AI-driven solution to close critical gaps in the early stages of product decision-making. Key outcomes include:

- Evidence that personalized product comparisons can enhance user confidence.

- Deeper insights into user needs and expectations for future development.

- A structured, measurable UX approach that includes accessibility and multimodal features.

- Actionable insights for aligning AI capabilities with existing processes and channels.

- A clear roadmap for a pilot, outlining key milestones, success metrics, and specific hypotheses about the user journey to validate.

Process

The PoC focused on identifying key pain points and exploring potential solutions using AI. Collaborating with the data science team was pivotal in ensuring that proposed features were grounded in realistic technological capabilities and aligned with user needs. This included brainstorming sessions to outline algorithms and prototype workflows, which led to the identification of ethical and regulatory considerations.

Key Objectives:

- Investigate how AI, particularly Large Language Models (LLMs), could enhance the decision-making process for users.

- Prototype interactive features, such as personalized product comparisons, chat interfaces, and simulations, to validate their feasibility and value.

- Define metrics to measure success and evaluate the potential impact of such a tool.

Proposed Features and Methodologies

1. Product Comparisons

- Goal:

Simplify the decision-making process by allowing users to understand and compare devices through authentic use case scenarios provided by actual users.

- Approach:

Designed an interactive video feed to showcase product use in realistic scenarios, informed by user research data. Collaborated with the data science team to outline clustering algorithms (e.g., K-Means) for categorizing product recommendations based on user preferences and historical data.

The proposal included sourcing user data from anonymized datasets drawn from existing operational records, enriched with synthesized data to fill gaps, so that no personally identifiable information was used. This approach would allow realistic simulation of user preferences while maintaining compliance with GDPR and HIPAA, without requiring overly complex infrastructure.
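To make the clustering idea concrete, here is a minimal, dependency-free sketch of K-Means (Lloyd's algorithm) grouping users into preference segments. The feature names (`comfort_with_technology`, `wants_tubeless_device`, `values_cgm_integration`), the user records, and the idea of surfacing videos tagged per segment are all hypothetical illustrations, not the PoC's actual features or data; a production version would more likely use a library implementation such as scikit-learn.

```python
import math
import random
from typing import Dict, List, Sequence, Tuple

Point = Sequence[float]


def nearest(point: Point, centroids: List[List[float]]) -> int:
    """Index of the centroid closest to `point` (Euclidean distance)."""
    return min(range(len(centroids)), key=lambda c: math.dist(point, centroids[c]))


def kmeans(points: List[Point], k: int, iterations: int = 100,
           seed: int = 0) -> Tuple[List[int], List[List[float]]]:
    """Lloyd's-algorithm K-Means; returns a cluster label per point and the centroids."""
    rng = random.Random(seed)
    # Initialise centroids from k distinct input points.
    centroids = [list(p) for p in rng.sample(points, k)]
    labels: List[int] = []
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid.
        new_labels = [nearest(p, centroids) for p in points]
        if new_labels == labels:
            break  # assignments stopped changing, so the clustering has converged
        labels = new_labels
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:  # keep the previous centroid if a cluster empties out
                centroids[c] = [sum(dim) / len(members) for dim in zip(*members)]
    return labels, centroids


# Hypothetical, synthetic preference vectors scaled to 0-1:
# [comfort_with_technology, wants_tubeless_device, values_cgm_integration]
users: Dict[str, List[float]] = {
    "user_a": [0.9, 1.0, 0.9],
    "user_b": [0.8, 0.9, 1.0],
    "user_c": [0.2, 0.0, 0.1],
    "user_d": [0.1, 0.1, 0.2],
}
labels, centroids = kmeans(list(users.values()), k=2)
segment = dict(zip(users, labels))

# A new visitor is placed in the nearest existing segment; the feed could then
# prioritize videos tagged for that segment (hypothetical tagging scheme).
new_visitor_segment = nearest([0.85, 0.95, 0.9], centroids)
```

Feeding only derived preference scores into the clustering, rather than raw records, fits the proposal's aim of keeping personally identifiable information out of the pipeline.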

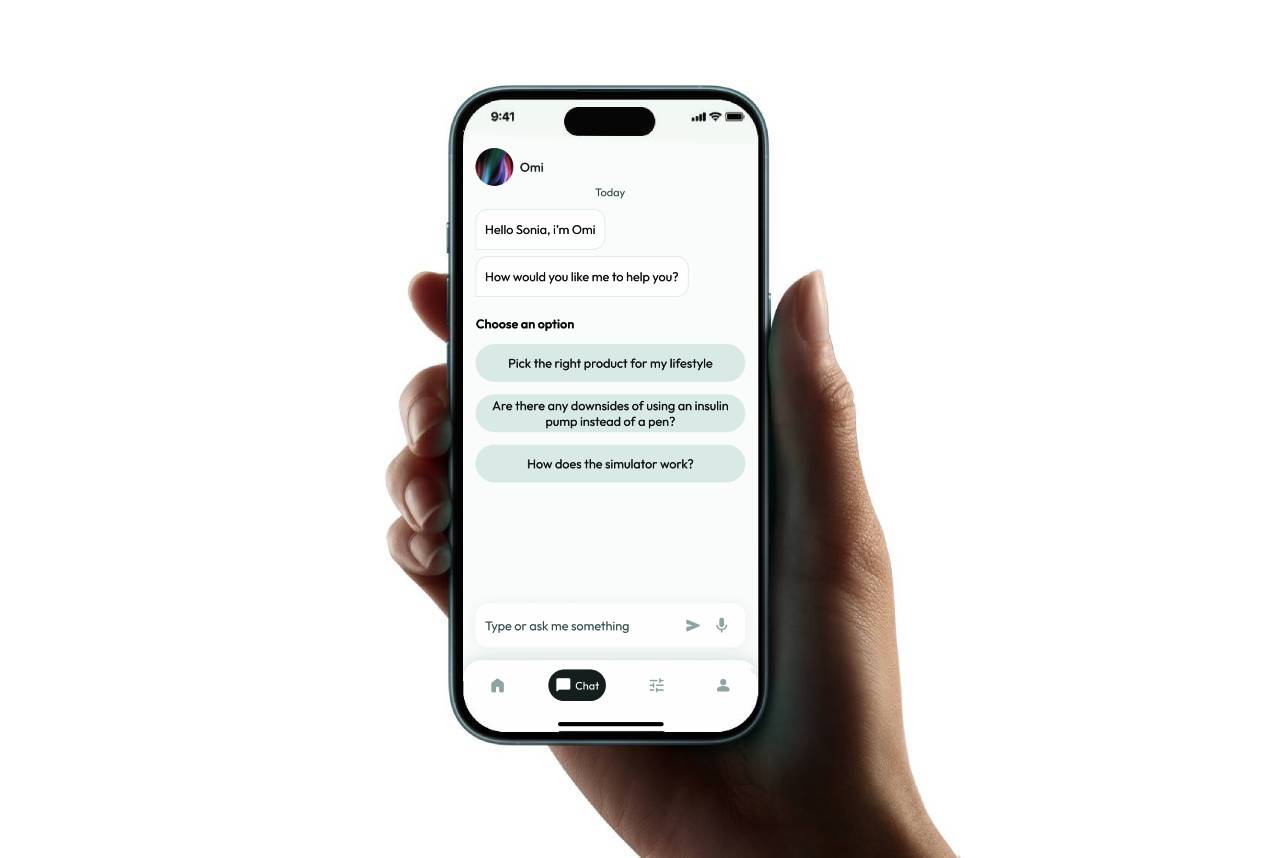

2. Chat Interface

- Goal:

Provide users with 24/7 access to basic troubleshooting and product guidance.

- Approach:

Evaluated two transformer-based LLMs for chatbot functionality: one optimized for cost-effectiveness with predefined responses, and another trained for dynamic interactions using domain-specific data.

Prototyped fallback mechanisms, escalation paths, and multilingual support to ensure inclusivity and reliability. Created scripts for Wizard of Oz testing to simulate user interactions and refine conversational flows.
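The fallback and escalation logic described above can be sketched as a tiered router: safety-sensitive messages go straight to a person, the cheaper predefined-answer tier is tried next, the dynamic model is used only above a confidence threshold, and everything else escalates. All names here (`PREDEFINED`, `ESCALATION_TERMS`, `HANDOFF`, `route`, `stub_model`), the keyword matching, and the 0.7 threshold are hypothetical stand-ins; the answers are placeholders rather than real device guidance, and the model is a stub rather than a call to any specific LLM API.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Tuple

# Hypothetical cost-effective tier: placeholder answers keyed by language, then keyword.
PREDEFINED = {
    "en": {
        "battery": "Predefined answer: battery replacement steps.",
        "alarm": "Predefined answer: how to review alarms.",
    },
    "es": {
        "batería": "Respuesta predefinida: pasos para cambiar la batería.",
    },
}
HANDOFF = {
    "en": "Connecting you with a person from our support team.",
    "es": "Le comunicamos con una persona de nuestro equipo de soporte.",
}
# Hypothetical safety triggers that always go to a human, never to a model.
ESCALATION_TERMS = ("emergency", "chest pain", "unconscious", "emergencia")


@dataclass
class Reply:
    text: str
    source: str  # "predefined", "model", or "human"; lets the UI label AI answers


DynamicModel = Callable[[str], Tuple[str, float]]  # returns (answer, confidence 0-1)


def route(message: str, lang: str = "en", dynamic_model: Optional[DynamicModel] = None,
          min_confidence: float = 0.7) -> Reply:
    text = message.lower()
    handoff = HANDOFF.get(lang, HANDOFF["en"])
    # 1. Safety-sensitive messages skip automation entirely.
    if any(term in text for term in ESCALATION_TERMS):
        return Reply(handoff, "human")
    # 2. Cheapest tier: keyword match against predefined answers,
    #    falling back to English when the language is not supported.
    for keyword, answer in PREDEFINED.get(lang, PREDEFINED["en"]).items():
        if keyword in text:
            return Reply(answer, "predefined")
    # 3. Dynamic tier: accept the model's answer only above the confidence threshold.
    if dynamic_model is not None:
        answer, confidence = dynamic_model(message)
        if confidence >= min_confidence:
            return Reply(answer, "model")
    # 4. Fallback: nothing was confident enough, so escalate to a person.
    return Reply(handoff, "human")


def stub_model(message: str) -> Tuple[str, float]:
    """Stand-in for the domain-tuned LLM; returns a fixed answer and a fake confidence."""
    return ("Stub answer.", 0.9 if "swim" in message.lower() else 0.2)


print(route("How do I replace the battery?").source)  # predefined
print(route("Can I swim with the pump?", dynamic_model=stub_model).source)  # model
print(route("Which insurance plans are accepted?", dynamic_model=stub_model).source)  # human
```

Returning a `source` with every reply is one way to support the requirement, discussed later, of clearly indicating when an answer comes from AI rather than a person.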

3. Simulations

- Goal:

Enable users to test how different devices might perform based on realistic data inputs.

- Approach:

Designed a streamlined setup process that asked users questions progressively to reduce cognitive load, so they could efficiently explore and compare results across various products without having to set up each product individually. Assumptions included that all products shared the same core capabilities, such as the ability to calculate and deliver bolus insulin amounts based on specific data inputs, and that the simulators had previously been tested to match the functionality of the actual devices.

To mitigate potential risks such as data inaccuracies or biases, the comparison use cases were based on realistic input data previously validated by a clinical team. This validation ensured alignment between simulated outputs and real-world device performance.
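The "answer once, compare everywhere" setup can be illustrated with a commonly cited textbook form of the bolus calculation: meal bolus (carbs divided by the carb ratio) plus correction ((current glucose minus target) divided by insulin sensitivity), minus insulin on board, floored at zero and rounded down to each device's dose increment. This is a hypothetical sketch for illustration only, not clinical guidance: real devices use their own validated algorithms (for example, some apply insulin on board only to the correction), and the field names and device increments below are assumptions.

```python
import math
from dataclasses import dataclass


@dataclass
class Inputs:
    """Answers collected once by the progressive setup (hypothetical field names)."""
    carbs_g: float                  # carbohydrates in the meal, grams
    glucose_mg_dl: float            # current blood glucose
    target_mg_dl: float             # target blood glucose
    carb_ratio_g_per_u: float       # grams of carbohydrate covered by 1 unit
    sensitivity_mg_dl_per_u: float  # expected glucose drop per unit
    insulin_on_board_u: float       # active insulin from earlier doses


def suggested_bolus(x: Inputs) -> float:
    """Textbook-style bolus: meal + correction - insulin on board, never negative."""
    meal = x.carbs_g / x.carb_ratio_g_per_u
    correction = (x.glucose_mg_dl - x.target_mg_dl) / x.sensitivity_mg_dl_per_u
    return max(0.0, meal + correction - x.insulin_on_board_u)


def round_down(units: float, increment: float) -> float:
    """Round down to the device's dose increment."""
    # Small epsilon guards against float error (e.g. 6.5 / 0.05 = 129.999...).
    steps = math.floor(units / increment + 1e-9)
    return round(steps * increment, 2)


# Hypothetical device profiles that differ only in dose increment (units).
DEVICES = {"pump_0.05u": 0.05, "pen_0.5u": 0.5, "pen_1u": 1.0}


def compare(x: Inputs) -> dict:
    """Run one set of inputs through every simulated device profile."""
    raw = suggested_bolus(x)
    return {name: round_down(raw, inc) for name, inc in DEVICES.items()}


meal = Inputs(carbs_g=60, glucose_mg_dl=180, target_mg_dl=120,
              carb_ratio_g_per_u=10, sensitivity_mg_dl_per_u=40, insulin_on_board_u=1.0)
print(compare(meal))  # {'pump_0.05u': 6.5, 'pen_0.5u': 6.5, 'pen_1u': 6.0}
```

Here 60 / 10 = 6.0 U for the meal and (180 - 120) / 40 = 1.5 U of correction, minus 1.0 U on board, gives 6.5 U; the 1-unit pen can only deliver 6.0 U, which is exactly the kind of difference a side-by-side simulation makes visible.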

Prototyping and Validation

I developed a prototype that incorporated the product’s core functionality, focusing on key features such as the video feed, the chatbot, and the simulator setup. Usability testing was conducted with five participants (two users and three caregivers) representing diverse demographics and varying levels of tech literacy.

To facilitate the study, I combined the interactive mockups with a Wizard of Oz test for the chat component. This more realistic setup let us assess the chatbot’s conversational flow (scripts) and gather participant feedback on the accuracy and relevance of its scripted responses. Tools like Voiceflow or Botmock are useful for quickly building and mimicking chatbot interactions, but for this test we used a simple prototype based on an app similar to WhatsApp.

During one user testing session, a 75-year-old male participant from India, who lives with his wife (she helps him manage his diabetes), asked:

"Do I use the chat like WhatsApp to talk to someone directly? Will I need an OTP?" "What are these questions here? Can I ask something else?"

This highlighted the importance of using test tools that realistically mimic the expected functionality, as well as localized, culturally aware content and responsive features tailored to individual lifestyles. Despite this limitation of the test setup, the session provided valuable insights and encouraged me to keep working closely with the data science team to evaluate users' needs and ensure alignment with the selected clustering and personalization algorithms.

Metrics

This PoC focused on user needs and on laying the foundation for validating the effectiveness of AI tools. Success was measured using the following metrics:

Adoption and Engagement

- Feature exploration rates: Assessed which features users engaged with most frequently.

- Task success rate: Measured whether users were able to find the information they were seeking successfully.

- Session duration: Measured how long users interacted with specific features.

Accuracy and User Satisfaction

- Chatbot response accuracy: Validated using feedback from the data science team and user ratings (binary like/dislike system).

- Simulation realism: Evaluated by comparing user feedback on expectations versus perceived accuracy of the simulated scenarios.
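As a sketch of how the quantitative metrics above could be computed from session logs, the snippet below derives feature exploration rates, task success rate, mean time per feature, and the chatbot like rate. The record shape (`feature_seconds`, `task_success`, `chat_ratings`) and every value in it are hypothetical placeholders, not data from the study.

```python
from collections import Counter, defaultdict
from statistics import mean
from typing import Dict, List, Optional

# Hypothetical session records; NOT data from the study.
sessions: List[dict] = [
    {"participant": "P1", "feature_seconds": {"video": 120, "chat": 200},
     "task_success": True, "chat_ratings": [True, True, False]},   # True = like
    {"participant": "P2", "feature_seconds": {"chat": 90, "simulator": 300},
     "task_success": True, "chat_ratings": [True]},
    {"participant": "P3", "feature_seconds": {"video": 60},
     "task_success": False, "chat_ratings": []},
]


def exploration_rates(logs: List[dict]) -> Dict[str, float]:
    """Share of participants who used each feature at least once."""
    counts = Counter(f for s in logs for f in s["feature_seconds"])
    return {feature: n / len(logs) for feature, n in counts.items()}


def task_success_rate(logs: List[dict]) -> float:
    """Share of sessions in which the participant found what they were looking for."""
    return sum(s["task_success"] for s in logs) / len(logs)


def mean_feature_duration(logs: List[dict]) -> Dict[str, float]:
    """Average seconds spent per feature, among participants who used it."""
    durations = defaultdict(list)
    for s in logs:
        for feature, seconds in s["feature_seconds"].items():
            durations[feature].append(seconds)
    return {feature: mean(values) for feature, values in durations.items()}


def chatbot_like_rate(logs: List[dict]) -> Optional[float]:
    """Share of binary chatbot ratings that were 'like'; None if nothing was rated."""
    ratings = [r for s in logs for r in s["chat_ratings"]]
    return sum(ratings) / len(ratings) if ratings else None


print(chatbot_like_rate(sessions))  # 0.75
```

With only five participants, figures like these are best read as directional signals for the pilot rather than statistically meaningful rates.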

Regulatory and Ethical Feasibility

I prepared the documentation needed for a compliance assessment with the legal and compliance teams to ensure adherence to data protection regulations relevant to healthcare, such as GDPR and HIPAA. This involved gathering relevant information from the data science team, reviewing data handling practices, and ensuring that all processes aligned with regulatory requirements to safeguard user data privacy.

Additionally, I collaborated with the data science team to evaluate potential biases in AI models, with a particular focus on large language model (LLM) outputs. Our goal was to identify and address biases that could negatively affect users, for example by making content accessible even when it is not presented in an accessible format by default, and by clearly indicating when AI-driven features are not backed by human interaction.

Key Learnings and Challenges

The following key learnings and challenges emerged from this PoC, offering insights into technical feasibility, user expectations, content scalability, and the overall value proposition for the product:

1. Technical Feasibility and User Expectations

- While clustering (video feed) and transformer-based (chat) AI models showed promise, their scalability and cost-effectiveness remain significant hurdles.

- The token usage and ongoing training costs of transformer models highlighted the need to balance advanced functionality against operational efficiency.

- User testing results revealed high expectations for personalization and accuracy, features that would require robust datasets and continuous optimization, a challenge given the constraints of a PoC.

- On the video feed, users asked about loading times and requested clearer labels and more relevant content. This feedback highlighted the scale of this feature and suggested that implementation could be ineffective without a clear strategy and an existing presence on similar channels (e.g., YouTube).

- Some users found the chat responses effective, while others identified the content as "somehow irrelevant" or confusing. Navigation was also reported as unclear for some, but all participants were able to complete the tasks.

2. Content Scalability

A scalable content strategy is essential to maintain engagement without exceeding operational costs. It should include:

- Optimize and label existing content (internal and influencer-built) to match required formats (size and length).

- Create new, high-quality branded content that is reusable across multiple channels.

- In the long term, incorporate open-source materials, engage new influencers, and provide templates for quick content creation.

- Separate content for healthcare training and engagement purposes, addressing testing and risk management differently.

- Use end-to-end metrics to refine messaging, adjust engagement tactics, and ensure content relevance across channels.

3. Value Proposition

- Based on projections from this PoC (after incorporating usability research findings), the app is expected to increase conversions and reduce customer support efforts. If, as the Wizard of Oz testing suggested, the chatbot can resolve at least 50% of queries, the time sales representatives spend on these questions could be significantly reduced.

- Initial findings revealed that four out of five participants understood the different devices without needing assistance, indicating that the app could help users select the right device, reducing product returns and replacements.

- According to IBM Watson AI reports, as user adoption grows, a product like this could reduce the need for additional support staff by 10% to 30% and increase self-service resolution rates by 25%.

- Incorporating this app into the onboarding process could minimize the need for follow-up calls with doctors after conversion, potentially contributing to a higher prescription rate.

Conclusion

As the UX lead for this PoC, I focused on aligning the design strategy with technical feasibility while keeping user needs at the forefront and anticipating the impact on critical metrics such as support costs and user adoption rates.

Because the usability study included only five participants, which limits the generalizability of its findings, we combined its results with benchmarks from similar tools. On that basis, we estimated that the app could reduce support costs by up to 30%, improve user confidence, drive higher adoption rates, and reduce product returns and replacements. These projections provide a solid foundation for advancing to a pilot phase with targeted approaches, such as leveraging open-source LLMs or hybrid solutions.

Additionally, by collaborating closely with the data science team, we demonstrated the technical and regulatory feasibility of features such as personalized comparisons, chat interfaces, and simulations. While significant challenges remain, particularly in scalability, cost management, and edge cases (e.g., users with low digital literacy), the insights from this exploration give clear direction for prioritizing future development. Ultimately, the PoC highlighted the importance of grounding ambitious technological solutions in user needs (both present and future) and in ethical and legal considerations.